Here are some “buckets” for making sense of why the news is in crisis: addiction, economics, bad actors and known bugs

I’ve been studying news and digital media since 2002 and the news has been in crisis for those past 16 years, possibly longer. And not just the handwringing “Oh no, citizens are producing their own news, what will happen to journalism as a profession?” crisis. No, we’re more than a decade into the “We can’t afford to pay for the news, what happens now?” crisis, and no closer to a solution.

And now we’ve got a new pack of crises spawned in 2016. The “Macedonian teenagers are destroying democracy with fake news” crisis. The “We don’t understand the Americans who elected Trump” crisis. The “Russian bots control what’s news” crisis.

These crises are real, but some are more real than others. I’ve spent part of the last year meeting with the Knight Commission on Trust, Media and Democracy, trying to learn the shape of these challenges and what might be done to address them. In the process of helping out as a consultant to the commission, I’ve become comfortable with four buckets these crises often fit into. I’m a believer that how you think about a problem shapes what solutions you propose, so picturing four crises feels like an improvement over a sea of troubles.

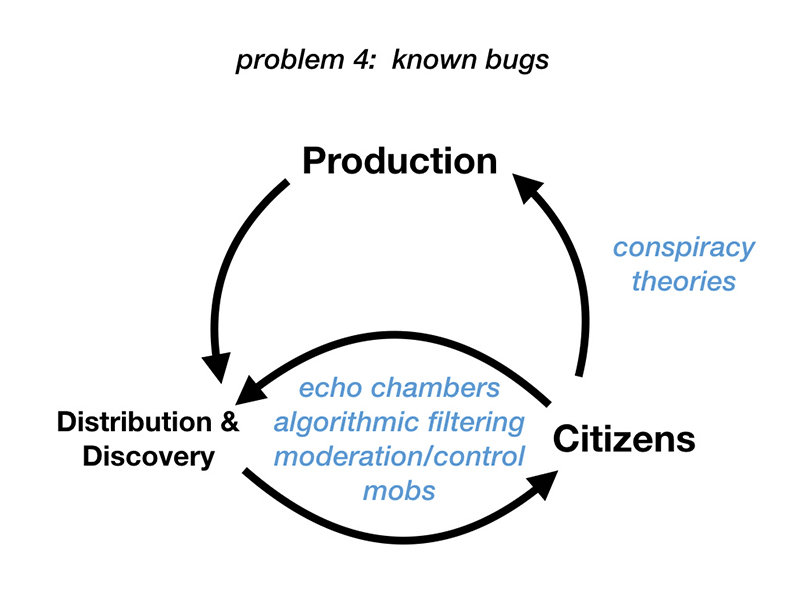

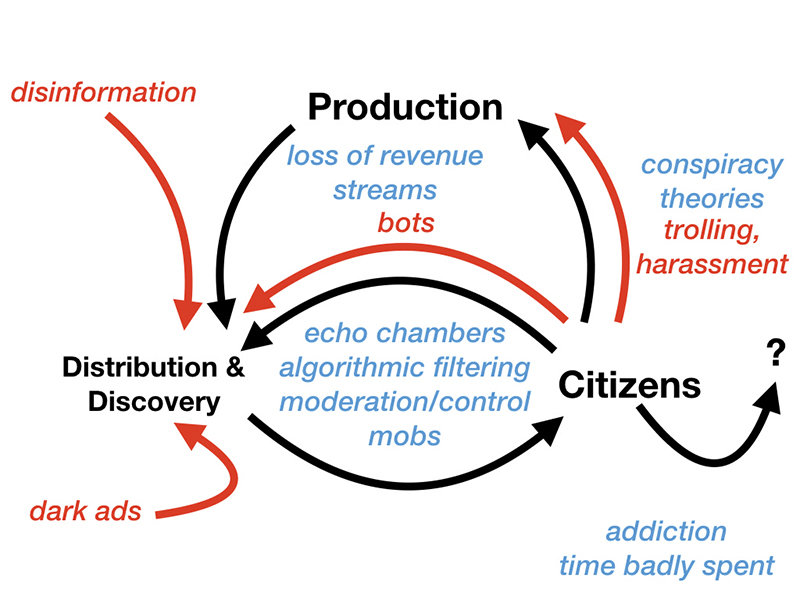

To understand these four crises — addiction, economics, bad actors and known bugs — we have to look at how media has changed shape between the 1990s and today. A system that used to be linear and fairly predictable now features feedback loops that lead to complex and unintended consequences. The landscape that is emerging may be one no one completely understands, but it’s one that can be exploited even if not fully understood.

What the Media Used to Look Like

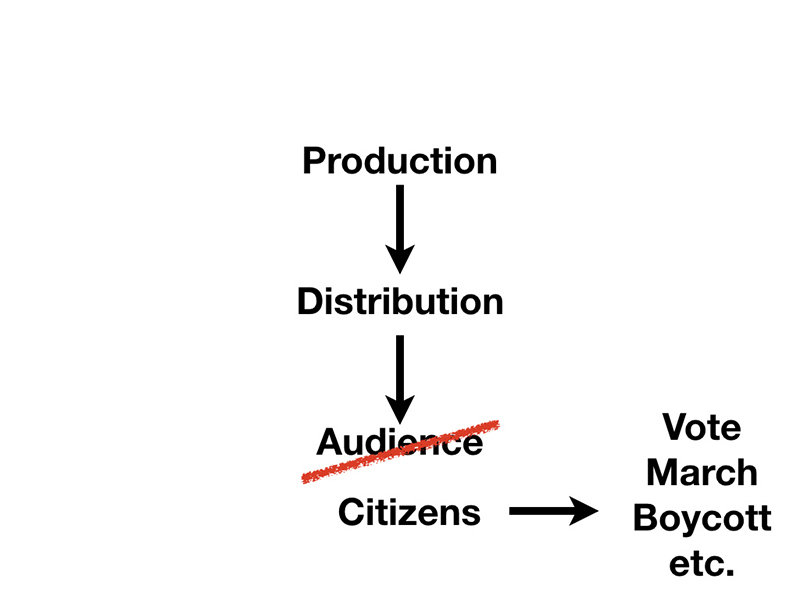

Media used to look something like this: Production → Distribution → Audience.

Producers made media (they wrote stories, filmed news segments) and handed them off to distributors to share with audiences locally or around the world. Often the distributors and producers worked for the same company: the same company that wrote the New York Times ensured that it got printed, bundled and delivered to people’s doorsteps. The flow of content was primarily linear and unidirectional. Yes, people wrote letters to the editor, and some may have influenced later coverage, but these influences were far sparser than in more contemporary models.

The lifecycle of content wouldn’t be so worthy of public attention except for the civic conviction that news helps us make informed decisions about our public lives. News allows us to be informed voters, to know when to call our representatives to express our opinions, and to know when we’re so outraged that we should take to the streets in protest or boycott a company. Journalism gains much of its power from the realization that audiences are also citizens. When investigative journalists uncover corruption at city hall, their power comes from the idea that thousands of citizens are reading and will vote the bums out unless change is made.

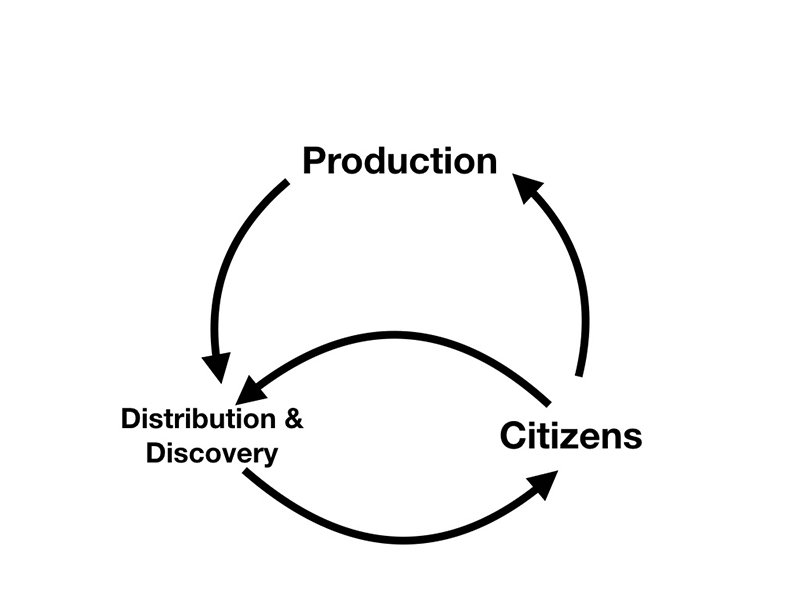

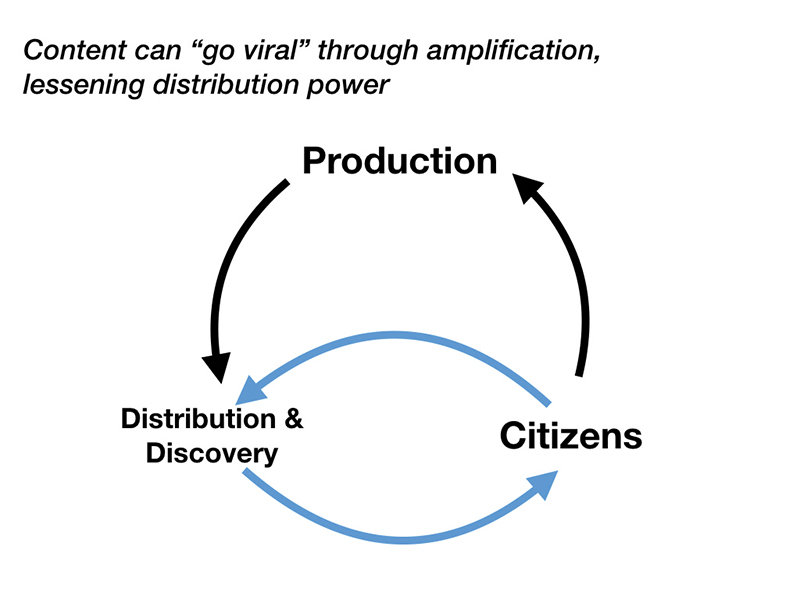

In modeling the contemporary media ecosystem, it’s important to understand that citizens have a whole new set of options beyond voting or marching. Citizens amplify content they like, sending signals back to distributors that they’d like more of the same. They create content that reacts to what’s been presented to them, entering the cycle as producers. As a result, they’ve got vastly more influence over the media agenda than they had in our earlier model.

This is only one change producers face in this new model. Most no longer control distribution as tightly as they did in earlier times — unlike newspapers whose companies housed writers and editors, the presses and the delivery trucks in the same building, producers are now one link in a more complex chain. Distribution of content on the internet is free, and attention is scarce, so the power goes not to those who have the presses or the broadcast towers, but to those who control vast audiences: Facebook, Google and the other major media platforms. Anthea Watson Strong, who currently works on local news at Facebook, suggests that people think of these tools as discovery services, more than distributors, because their real power is helping people discover new content, either from their friends or from vast databases of recommendations.

These discovery services are attention brokers, making their money from siphoning a fraction of attention off and selling it to advertisers. Unlike the broadcast towers and printing presses, these services are independent of the content producers, and they’re far less cooperative about sharing revenues than their predecessors were. Without them, though, many news producers would lose their audiences entirely.

“Buckets of Problems”

The first bucket of problems comes from these challenges to the business model: how do citizens continue to pay for high-quality journalism when attention and advertising dollars are controlled by discovery engines? There’s an understandable and legitimate fear that the “viral cycle” rewards certain types of engaging, popular content at the expense of important though less entertaining content. Most at risk is accountability journalism, especially at local levels, which was threatened even in pre-digital days. Only a few dozen people might read the minutes of last night’s meeting at City Hall, but it matters immensely that a seasoned political reporter is scanning those notes carefully, looking for possible scandals or abuse and threatening to splash them on the front page if officials don’t behave ethically. It’s difficult to know how this essential content is supported in an economy where attention is the scarce commodity: even if news organizations can pay the reporter, her power comes from having an audience to reach. Without high-quality journalism, bad deeds go unpunished, power is not balanced with accountability and citizens remain uninformed. One of the disturbing aspects of this situation is that democracy requires not only high-quality journalism, but an audience of citizens to consume it. Our problem is not just paying for stories. It may include paying for these stories to reach the audiences who need them, if journalism can’t win a battle for attention in our discovery engines and attention markets.

A second bucket of problems comes from the addictive nature of social media. It’s fun to play the game of discovery and amplification, and millions of people are finding it hard to put down their phones long enough to sleep. Tristan Harris has a theory on why this is: these tools have been designed by people who’ve studied gambling and other addictive phenomena, and they’re literally building tools designed to get us hooked on social media. As Tristan and others raise red flags, we are seeing indications that those in the know understand how powerful these tools can be — Silicon Valley executives have begun embracing practices of mindfulness, speaking of “time well spent” and ordering their own children to put their phones down.

A nation of social media addicts may not be the most fertile field for participatory democracy. Indeed, it may be precisely the environment in which misinformation and mob behavior spread like an epidemic in a crowded city. But questions remain about how many of these concerns are best understood as the moral panic that often takes hold with the adoption of new technologies, and what problems might genuinely affect how we think about citizenship today.

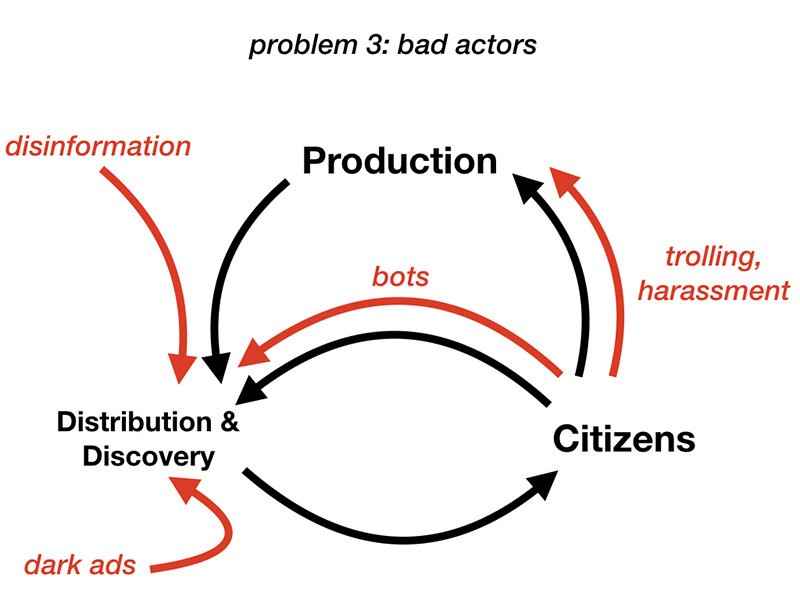

To understand the third and fourth buckets of problems, let me return to the model of new media I’m proposing. While it’s likely that no one has a full understanding of the dynamics of this ecosystem and its feedback loops, bad actors are finding ways to inject ideas into the conversation by targeting vulnerable points in the system. Networks of bots amplify stories in ways that make them appear popular with thousands of users, sending false signals to distribution engines that make those stories and topics unduly popular. Content created to silence other voices online through trolling and harassment feeds into the system in much the same way as legitimate comments and reactions. Similarly, disinformation produced either to deceive or to divert attention for profit enters the system disguised as legitimate news. Dark ads allow narrowly targeted content to influence a selected audience without “outsiders” ever seeing it.

If there’s good news about bad actors, it’s that the social media ecosystem would function just fine without them. It’s easy to imagine Facebook or Twitter without most kinds of bots, and platforms are already showing an increased interest in countering harassment and blocking some kinds of disinformation. What’s more disturbing are the bad behaviors that may simply be part and parcel of the new media environment.

Even when all participants in a system are acting in good faith, ugly behaviors still emerge. Whether it’s due to algorithms (as Eli Pariser believes) or simply due to homophily and the cognitive dangers from birds of a feather flocking together (as Cass Sunstein and I, separately, have argued), echo chambers and filter bubbles seem to form online, surrounding us with content that tends to polarize us. Some of these isolated conversations seem to gravitate towards the paranoid, leading to the spread of conspiracy theories and other unreliable content. Maintaining these communities requires some degree of control, which leads to complaints about the biases of algorithmic filters and control asserted by moderators. And even with control, mobs form and punish what they perceive to be bad behavior.

What’s toughest about this fourth bucket of problems, the known bugs, is that they seem to appear even when everyone’s behaving well within a media ecosystem. Much as I dislike what Breitbart has to say, they’re producing content for audiences that want to hear it. That center-right audiences gravitate — or are algorithmically nudged — to the far right doesn’t appear to be the result of anyone’s sinister plan. Worse, it’s simply an emergent property of a complex system, which makes it harder to determine who’s to blame and who’s responsible for fixing it.

Taken together, even this minimal model of the problems raised for media and democracy seems pretty daunting. But understanding the problems as buckets of interrelated challenges that can be solved without unraveling the entire Gordian knot makes the situation more tractable.

Financing the News

The problem of financing the news is hardly new, and it’s unlikely that my friends on the Knight Commission are going to come up with something radically better than what the collective wisdom of online and offline publishers, journalism schools and business schools has produced in the past decade. I think it’s unlikely that there’s a solution to this set of problems without insisting on the necessity of news for participation in a democracy, which opens options too seldom considered in our country, where market solutions are always preferred. News may be too important to leave to the whims of the market. Whether that means financing public media providers that are positioned to give us the essential facts of the world we live in, or building a robust set of membership models that allow a small set of subscribers to make critical information available to wider audiences, there are solutions to the problem of financing the news. Unfortunately, these solutions are rarely popular because they’re expensive and hard to sell in the U.S., where they run counter to conventional thinking about markets and speech.

Harder is the problem of getting people to pay attention to the high-quality news that’s already produced. This is the sort of problem that will require news publishers to work closely with discovery engines, and might open intriguing possibilities, like social media systems supported in part by public media funds, designed to help people mix opinions and perspectives from friends with a backbone of information from trusted sources.

Unlike the problem of financing media, many of the concerns about the addictive nature of social media are new. There’s a need for psychological research to figure out how deep public concerns should be, given a long history of public overreaction to the dangers of new media technologies. It’s likely that the field also needs strong consumer protection groups that can demand that technology platforms take our well-being seriously. And if there’s evidence that companies are building these tools with an addiction model in mind, regulating how these companies operate seems like a very reasonable step.

While bad actors, the third set of problems outlined here, have provoked the most dialog since the 2016 election, they are the easiest problem to tackle, as platforms and consumers have aligned interests. Bots aren’t good for Twitter—they inflate user numbers in the short term, but lead investors to worry about whether the site’s users are real—nor are they good for users of the platform. Companies like Facebook, Google and Twitter will need to work closely with scientists in academia, opening up data sets and collaborating closely so these problems can be tackled—Twitter’s move towards collaboration on healthy media ecosystems is a step in the right direction, and if done well, the expertise of academics and activists who are already fighting harassment online can be incorporated into key systems. Platforms should be able to tackle bots with machine learning systems, as their signatures are highly recognizable. And emerging movements towards transparency in advertising are steps in the right direction in combating dark ads and other forms of paid influence.

What’s most needed here is a way for platforms and their critics to share data, tools and methods in a way that’s less threatening to both sides, a problem that has become much harder with the fallout of the Cambridge Analytica scandal. Right now, academics and activists can’t do their best work on these platforms without the data they need, and platforms are understandably afraid of being excoriated in the press for their failings. Everyone needs a way for these parties to work together with some mutual understanding, tolerance and care, with a joint goal of making bad actors less powerful. Similarly, everyone involved with these debates also needs, immediately, to understand better how powerful these bad actors actually are. We may be giving this set of problems far more attention than it deserves, simply because the notion of Russian manipulation of elections or Macedonians marketing to ethnic nationalists is so fascinating. In truth, these may not be the biggest problems around media and democracy.

It’s the fourth bucket of problems that keeps me up at night. While bots are bad for Twitter and Facebook, polarization turns out to be a great business model: it leads to engaged and passionate users, who are good for profits and bad for democracy. The platform companies simply may not have an appetite to take on “known bugs” problems, both because these bugs are hard to fix, and because they may be bugs for democracy rather than for these businesses. Here the best fixes may be environmental. Rather than tempting or threatening (through regulation, perhaps) Facebook to fix the polarization of our political system, we need to help build a climate that encourages new rival platforms to take on these challenges. For starters, we could look at policies that require existing platforms to allow users to export their data and move it to new platforms, and to bridge between them so users can try other ways to interact online.

Having a clearer shape of a problem is not the same thing as having solutions. But it’s important, if only so we don’t collapse in the face of a task that often seems insurmountable: making sense of how the current media environment prepares citizens to be effective civic actors.

Ethan Zuckerman is director of the Center for Civic Media at MIT and an associate professor at MIT's Media Lab. His research focuses on issues of internet freedom, civic engagement through digital tools and international connections through media. This edited article originally appeared on the Knight Commission on Trust, Media and Democracy website.
