For anyone in the business of news, artificial intelligence (AI) is one of those topics that elicits equal parts exhilaration and despair. AI shows groundbreaking promise in science and medicine, and Big Tech is keenly focused on developing its capabilities further.

During a guest appearance on the podcast “In Good Company with Nicolai Tangen,” former Microsoft chief Bill Gates hyped AI: “[AI] is the one I’ve been thinking about my whole life. … It’s actually surprised me that we have this acceleration. AI will be the biggest thing in this decade, I would say.”

Google is already deep in AI, with technologies like Google Assistant, Google Translate and its chatbot, Bard. Google and Alphabet CEO Sundar Pichai opened a Feb. 6 blog post with: “AI is the most profound technology we are working on today.”

Later that same month, Meta Founder and CEO Mark Zuckerberg revealed that his company plans to “turbocharge” artificial intelligence R&D.

Defining AI

A lecture on digital forensics, delivered by Claire Wardle, Ph.D., at the Columbia Journalism School, fascinated Aimee Rinehart and led her to her current role at The Associated Press (AP). She’s the program manager for local news AI strategy and works in concert with AI Product Manager Ernest Kung.

“Our first order of business was to figure out where our local newsrooms were, in terms of adopting technology, technology that includes automation and artificial intelligence,” Rinehart said. Nearly 200 local news leaders from every U.S. state responded to the AP survey.

“We got a lot of insight, and basically, we learned that local newsrooms desperately need automation — not necessarily AI — and that the smallest newsrooms could benefit the most from these technologies,” Rinehart suggested.

Sometimes, the terms AI and automation are used interchangeably, but there’s an important distinction, Rinehart noted.

“Automation is really just setting up rules, like with your email inbox. If somebody from work emails you, it goes to one bucket; if someone outside of work emails you, it goes to another, and then you’ve got your spam. Those are rules put into place to help organize and sort things. That's a basic example of automation,” Rinehart explained. “An AI version of that would be if I trained my inbox to know that everyone with an @ap email address is routed to a folder, and it triggers that message to be added to a planning document. It’s artificial intelligence that can take a leap, if you will, based on what the rules are and based on training. It can determine through probability what the next logical step, answer, or next word should be.”
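The rule-based half of Rinehart's inbox example can be sketched in a few lines of Python. This is a minimal illustration, not any newsroom's actual setup: the `ap.org` work domain, the spam phrases, and the folder names are all assumptions made for the example.

```python
from dataclasses import dataclass

# Assumptions for illustration only: the work domain and spam phrases are hypothetical.
WORK_DOMAIN = "ap.org"
SPAM_PHRASES = ("free money", "act now")


@dataclass
class Email:
    sender: str
    subject: str


def route(email: Email) -> str:
    """Pick a folder using fixed, hand-written rules (automation, not AI)."""
    subject = email.subject.lower()
    # Rule 1: anything containing a known spam phrase goes to spam.
    if any(phrase in subject for phrase in SPAM_PHRASES):
        return "spam"
    # Rule 2: mail from the work domain goes to the work folder.
    domain = email.sender.rsplit("@", 1)[-1].lower()
    if domain == WORK_DOMAIN:
        return "work"
    # Rule 3: everything else is treated as outside mail.
    return "outside"


print(route(Email("editor@ap.org", "Budget meeting")))
```

Every outcome here traces back to a rule a person wrote. Nothing is learned from past messages, which is the distinction Rinehart draws between automation and AI.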

At AP, automated content generation has been in use since 2014, Rinehart estimated, when the AP started using Natural Language Generation (NLG) to produce earnings reports.

“Natural Language Generation is what I’d call v1.0 of artificial intelligence,” she said.

“With [NLG], you have to have very precise and consistent inputs, and where do you get that? You get it from a spreadsheet. … Generative AI kind of takes that spreadsheet and explodes it,” she continued. “Generative AI is really pulling ‘out of thin air’ — based on probability and its training — what comes next, whether that’s a word or some other type of output. It’s really exciting, incredibly unpredictable, and unsettling for journalists.”
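The idea of choosing "what comes next" by probability can be shown with a toy word-pair model. This sketch is far simpler than the large language models behind generative AI tools, and the training sentence is invented for the example, but the principle of counting what usually follows and picking the likeliest option is the same.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def next_word_probabilities(model: Dict[str, Counter], word: str) -> Dict[str, float]:
    """Turn the raw follow-on counts for a word into probabilities."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def most_likely_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most probable next word, or None if the word was never seen."""
    probs = next_word_probabilities(model, word)
    return max(probs, key=probs.get) if probs else None


# Invented training text, for illustration only.
TRAINING_TEXT = (
    "the council approved the budget "
    "the council approved the plan "
    "the council rejected the proposal"
)
model = train_bigrams(TRAINING_TEXT)
print(most_likely_next(model, "council"))
```

Because the output depends on the training text rather than on a fixed template, feeding the model different text changes what it predicts, which hints at why generative output is harder to anticipate than spreadsheet-driven NLG.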

As part of the AP Local News AI Initiative, AP and local newsroom partners are using GPT-3 technology — for example, to report on public safety incidents at the Brainerd Dispatch in Minnesota. At KSAT-TV in San Antonio, they’re automating video transcription and summary stories.

Other high-profile publishers — Wired, BuzzFeed, Bloomberg, The Washington Post and The Guardian — are now using AI to some extent. The Financial Times hired Madhumita Murgia as its first artificial intelligence editor, and “Chief AI Officer” is an emerging C-suite title.

AI seems to be everywhere, all at once.

Jessica Davis began her career as a reporter and moved into audience roles. Last fall, she became Gannett’s senior director, data initiatives and news automation. During a video call, Davis explained to E&P how USA TODAY Network titles now leverage NLG, including for “Localizer,” an initiative to derive local context from national data. USA TODAY Network journalists have produced real estate trend pieces thanks to a data partnership with Realtor.com. They’ve used National Weather Service data for hurricane trackers. They tracked COVID data and produced weekly stories about transmission rates. And they’ve covered unemployment, comparing national and state stats.

The key is accessing reliable data and then “cleaning it” through “good old-fashioned data journalism,” Davis said.

As a best practice, Gannett includes a disclaimer on any stories compiled with the help of automation. But every story still carries the byline of the journalist behind it.

“We believe the processes and transparency around this are really important for quality, accuracy and our standards,” Davis said.

“Here, it’s humans templating stories and using structured data to automate content, so it’s very human-driven, with a big lift from the technology. … There is still a human in the loop. Human and loop are a really important part of this,” she said.
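A rough sketch of what "humans templating stories and using structured data" might look like in code follows. It is not Gannett's system: the template wording, the disclaimer text, the field names, the byline, and the numbers are all hypothetical, chosen only to show a human-written template being filled from a structured row.

```python
# Human-written template; the wording is hypothetical.
TEMPLATE = (
    "The unemployment rate in {state} was {state_rate:.1f}% in {month}, "
    "{comparison} the national rate of {national_rate:.1f}%."
)
# Hypothetical disclaimer text attached to every automated story.
DISCLAIMER = "This story was compiled with the help of automation."


def compare(state_rate: float, national_rate: float) -> str:
    """Choose the comparison phrase from the data, not from a guess."""
    if state_rate > national_rate:
        return "above"
    if state_rate < national_rate:
        return "below"
    return "matching"


def render_story(row: dict, byline: str) -> str:
    """Fill the template from one structured data row and add byline and disclaimer."""
    sentence = TEMPLATE.format(
        state=row["state"],
        state_rate=row["state_rate"],
        month=row["month"],
        national_rate=row["national_rate"],
        comparison=compare(row["state_rate"], row["national_rate"]),
    )
    return f"By {byline}\n{sentence}\n{DISCLAIMER}"


# Made-up row for illustration; these are not real statistics.
row = {"state": "Example State", "month": "March", "state_rate": 4.2, "national_rate": 3.9}
story = render_story(row, byline="Jane Doe")
print(story)
```

The template can only say what a person wrote into it, so a journalist stays responsible for the wording and the data cleaning, and the software handles the repetition.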

Rise of the chatbot

The Monmouth University Polling Institute reported in February on public opinion about AI. The survey found that 60% of respondents were familiar with OpenAI’s ChatGPT, and 72% said they expected AI to produce news content in the future. However, 78% said that’s a bad idea.

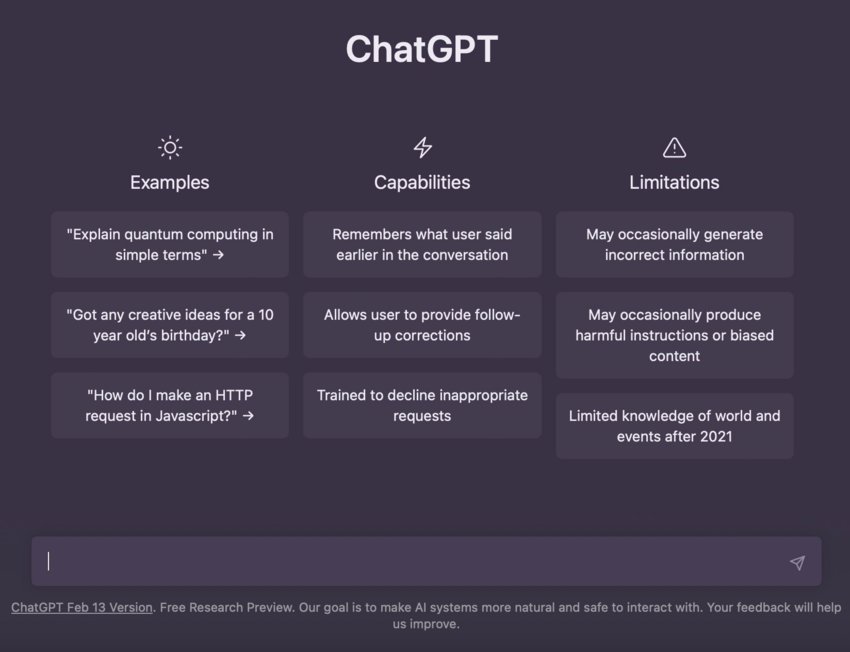

ChatGPT is fun and wildly popular. In March, OpenAI announced GPT-4 (Generative Pretrained Transformer 4), making it available to paid ChatGPT Plus subscribers and developers.

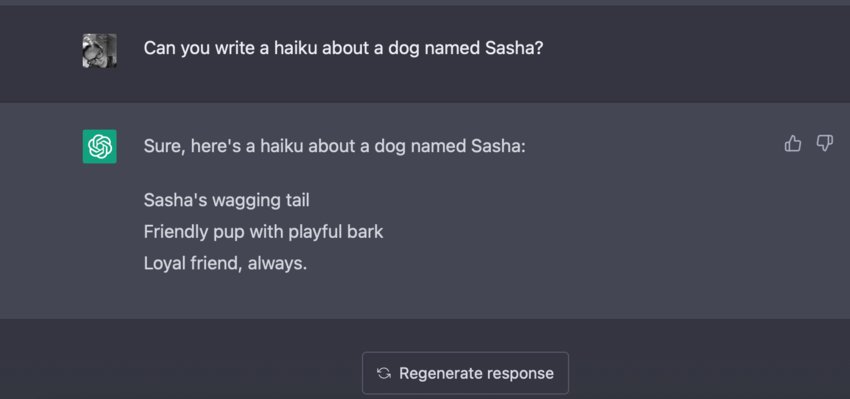

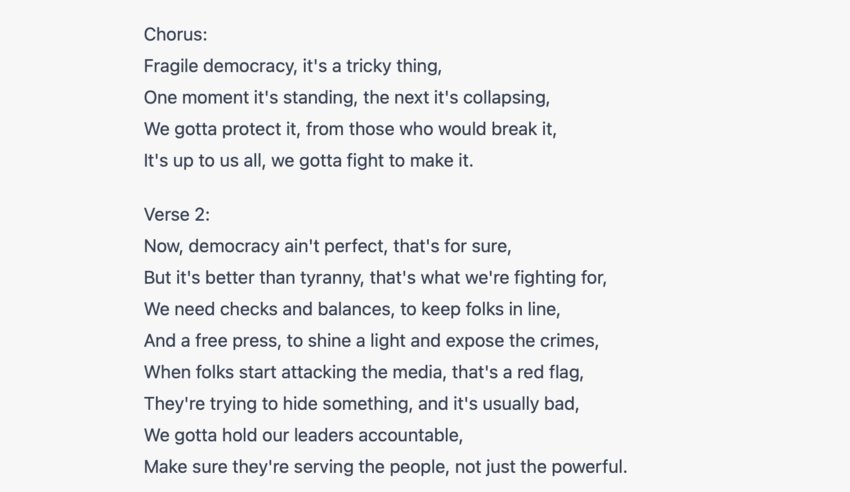

While writing this article, we put the free version of ChatGPT through its paces, challenging it to write a haiku about a dog; a sonnet about spaghetti; a rap song about democracy’s fragility; and a brief explainer on U.S. news deserts. These were fluff assignments.

But the technology has a dark side, as The New York Times technology columnist Kevin Roose discovered during a now-notorious conversation with Microsoft Bing’s AI chatbot. Over the course of hours, the AI expressed anger, snark, boredom, affection, a desire to be alive and a secret identity when prompted by Roose’s probing questions. He told readers it left him feeling “deeply unsettled, even frightened.”

Emily Bell, director of the Tow Center for Digital Journalism at Columbia University’s Graduate School of Journalism, penned a March op-ed for The Guardian, in which she tamped down some of the resulting hysteria, pointing out that it’s not actually possible for chatbots to feel emotions. The greater concern about AI tools is that they “have absolutely no commitment to truth,” she suggested.

The input can be inaccurate or nefarious; the output can be skewed, biased, false and enduring.

“Just think how rapidly a ChatGPT user could flood the internet with fake news stories that appear to have been written by humans,” Bell wrote.

“The fear is profound on this,” the AP’s Aimee Rinehart concurred. Still, she said that ChatGPT is compelling to journalists for three reasons: It’s cheap, fast and (can be) helpful.

“Is it accurate? Sometimes,” Rinehart said. “We would never say, ‘go straight to press,’ with something it created. But for small local newsrooms or news outlets that don’t have a marketing team, ChatGPT could be very useful.”

Rinehart stressed that its usefulness will largely depend on how the tool is used. Rather than simply asking a chatbot questions, it’s more productive to feed it material first: for example, inputting an article you’ve written and asking it to create a Twitter thread about it, summarize it or suggest some headlines. E&P asked ChatGPT for headline suggestions for this story, but after several earnest tries, we wrote our own.

E&P spoke with Jay Allred and Evan Ryan by video conference about LedeAI, an automation tool that enables news outlets to report on high school sports. Allred is the president of Source Media Properties, which developed LedeAI, and Ryan is LedeAI’s co-founder and CTO. They count The Atlanta Journal-Constitution, Lee Newspapers and Forum Communications among its users.

Allred and Ryan decided to focus this technology on high school sports because the dataset is available and accurate. And it’s a huge market. “There are about 7,000 high school football games alone every Friday night in America. That means that there are 14,000 high schools playing football every Friday, and 14,000 little tiny audiences that care deeply about those young men and women on the field,” Allred said. “The applications in the news industry where fully automated AI can be trustworthy and accurate — and really, truly helpful — are limited right now.”

Data is critical to all this. Bad input begets bad output, meaning news publishers must procure reliable datasets. That’s narrowed the coverage scope to things like sports, weather, financial markets data and real estate.

But just because you can produce AI-generated content doesn’t necessarily mean your audience wants it. Rinehart noted that AP previously automated stories about minor league baseball scores. They weren’t popular with readers, but it was an inexpensive trial.

“That’s why I’m so excited about Generative AI. It’s absolutely attainable,” Rinehart said. “It’s similar to what the web and social media have done, which is to democratize the flow of information.”

AI comes to AV

AI is transforming photography and audio/video production, too.

OpenAI’s DALL-E 2 can create images and artwork from descriptive inputs. The AI photo generator ThisPersonDoesNotExist.com randomly creates images of fabricated people.

This year, Podcastle Software integrated AI technology into its platform with “Revoice,” a text-to-audio editing feature. OpenAI unveiled Whisper, an open-source speech recognition model that can transcribe speech in dozens of languages.

R&D in AI is well-funded and energized. But imagining the minefield AI presents for news publishers is not difficult. Concerns about copyright, ethics, transparency, plagiarism and libel abound.

Dor Leitman is senior vice president of product and R&D at Connatix, a video platform developer. The company’s end-to-end solution enables publishers to automate video production and monetize that content. They employ over 100 developers working on this mission, tapping AI to create personalized video content.

Leitman is keeping watch on AI-related legal challenges ahead.

“What data is being used to train the models that generate images and video?” he said. “Who owns the media we create? Is it the artists whose work was used to train the model, for example? Is it the AI company, like OpenAI, or is it me, the guy who just ‘wrote’ and published a poem?”

Finally, there’s the uncertainty about AI’s impact on labor.

Gannett’s Jessica Davis sees automation as a way to enhance journalism. “We don’t use [NLG] automation tools to replace reporters,” she said. “The AI tools can help us fill gaps and free up journalists to pursue things that can’t be automated.”

After all, AI can’t be empathetic during a crime victim interview. It’ll never be the recognizable face of a local news reporter out in the community.

But it would be foolish to think AI isn’t going to impact hiring and retention.

AP’s Aimee Rinehart had a pragmatic take: “There’s no doubt that, if you work in words or images, there will be changes coming — in terms of how your job is done, in terms of how businesses are going to allocate resources for those jobs. And I do mean job cuts. I’m not here to say or pretend there won’t be change. There’s going to be change. However, I’m most optimistic about how local newsrooms can take advantage of these technologies with the people who remain in the newsroom.”

Rinehart added, “Anywhere words or images touch down will be impacted by Generative AI. AP produces 3,000 images a day and 2,000 articles a day, so you know it will change an organization like AP profoundly — and across every department. It's not just the news departments. It's marketing, legal and the revenue team. Every job is going to be affected by this.”

Gretchen A. Peck is a contributing editor to Editor & Publisher. She’s reported for E&P since 2010 and welcomes comments at gretchenapeck@gmail.com.
